The Context Advantage: Why generic AI isn't enough

Generic LLMs lack visibility into your specific environment. They often provide long, irrelevant advice because they don't see your cluster and resource configurations.

AI Insights Beta: Context-Aware Kubernetes Troubleshooting

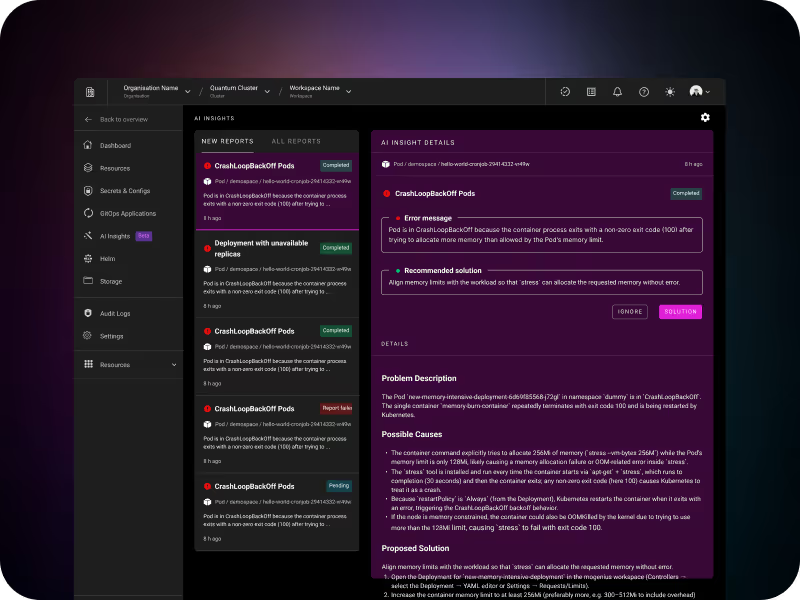

Move beyond generic AI suggestions with mogenius. Get precise root cause analysis and actionable fixes directly in your cluster management interface.


AI Insights is built into the mogenius platform and automatically scans your cluster. You maintain full control over data, models, and costs.

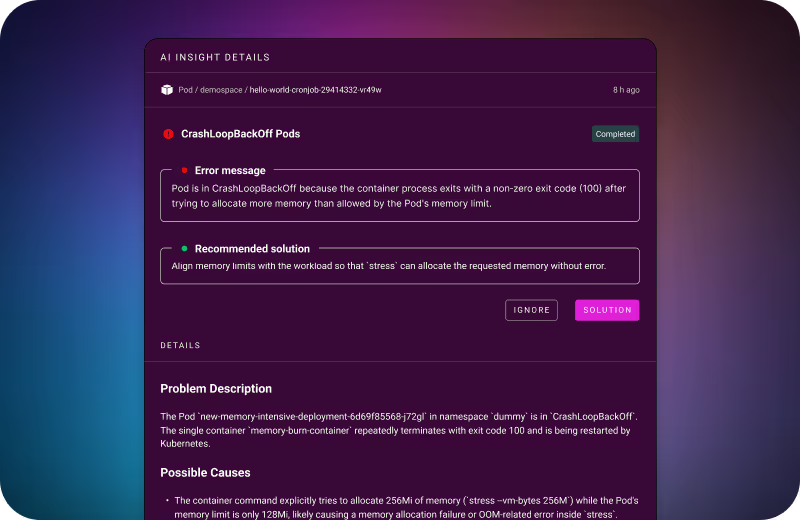

When a pod is stuck in CrashLoopBackOff because its container was OOMKilled (out of memory), the agent doesn't just see the error. It analyzes container logs and resource limits and explains the exact reason.
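To make the idea concrete, here is a minimal sketch of the kind of check such an analysis could start from. It is not the mogenius implementation. The field names follow the Kubernetes Pod API (as returned by `kubectl get pod <name> -o json`), while the sample pod, its values, and the function name `find_oom_crash_loops` are assumptions made up for illustration.

```python
# Hypothetical pod snapshot, shaped like the JSON from `kubectl get pod <name> -o json`.
# Field names follow the Kubernetes Pod API; all values are invented for illustration.
pod = {
    "metadata": {"name": "checkout-7d9f", "namespace": "shop"},
    "spec": {
        "containers": [
            {"name": "api", "resources": {"limits": {"memory": "256Mi"}}}
        ]
    },
    "status": {
        "containerStatuses": [
            {
                "name": "api",
                "restartCount": 7,
                "state": {"waiting": {"reason": "CrashLoopBackOff"}},
                "lastState": {"terminated": {"reason": "OOMKilled", "exitCode": 137}},
            }
        ]
    },
}


def find_oom_crash_loops(pod):
    """Return one finding per container that is crash-looping after an OOM kill."""
    # Map container name -> configured memory limit (None if no limit is set).
    limits = {
        c["name"]: c.get("resources", {}).get("limits", {}).get("memory")
        for c in pod["spec"]["containers"]
    }
    findings = []
    for status in pod.get("status", {}).get("containerStatuses", []):
        waiting = status.get("state", {}).get("waiting") or {}
        terminated = status.get("lastState", {}).get("terminated") or {}
        if (
            waiting.get("reason") == "CrashLoopBackOff"
            and terminated.get("reason") == "OOMKilled"
        ):
            findings.append(
                {
                    "container": status["name"],
                    "restarts": status.get("restartCount", 0),
                    "memory_limit": limits.get(status["name"]),
                }
            )
    return findings


findings = find_oom_crash_loops(pod)
for f in findings:
    print(
        f"{f['container']}: OOMKilled, {f['restarts']} restarts, "
        f"memory limit {f['memory_limit']}"
    )
```

A generic LLM only sees whatever error text is pasted into it. A check like this one has the container state and the configured limit side by side, which is the starting point for a specific explanation.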


Generic AI advice often adds more noise to an already complex environment. Let’s talk about how context-aware insights can specifically help your teams reduce MTTR (Mean Time to Recovery) and free up your DevOps experts for high-value platform engineering.

You can use your own models. mogenius supports a Bring Your Own Model (BYOM) approach: connect your authorized enterprise endpoints, such as Azure OpenAI or self-hosted models, so that all data processing aligns with your internal security and compliance frameworks.

The AI never changes your cluster on its own. mogenius follows a "Human in the Loop" philosophy: the AI proposes a fix and generates the necessary YAML changes, but no action is taken without human approval. You maintain full control to review and authorize every deployment.
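The approval gate described above can be sketched in a few lines. This is an illustration of the general pattern only, not mogenius code: the `ProposedFix` class, the `approve` and `apply_fix` functions, and the sample patch are all hypothetical names and values.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ProposedFix:
    """A fix proposal awaiting human review (hypothetical structure)."""
    resource: str
    yaml_patch: str
    approved_by: Optional[str] = None


def approve(fix: ProposedFix, reviewer: str) -> None:
    """Record which human authorized the change."""
    fix.approved_by = reviewer


def apply_fix(fix: ProposedFix) -> str:
    """Refuse to act unless a reviewer has approved the proposal."""
    if fix.approved_by is None:
        raise PermissionError(f"fix for {fix.resource} has not been approved")
    # A real system would apply the patch to the cluster here; this sketch only reports it.
    return f"applied patch to {fix.resource} (approved by {fix.approved_by})"


# Invented example: raise a memory limit after an OOM kill.
fix = ProposedFix(
    resource="deployment/checkout",
    yaml_patch="resources:\n  limits:\n    memory: 512Mi\n",
)

try:
    apply_fix(fix)
    blocked = False
except PermissionError:
    blocked = True  # nothing happens before a human signs off

approve(fix, reviewer="platform-lead")
result = apply_fix(fix)
print(result)
```

The point of the pattern is that the apply step checks for approval itself, so a proposal cannot reach the cluster by skipping the review step.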

By automating the initial investigation phase, mogenius cuts troubleshooting time from hours to seconds. The AI Insights agent proactively monitors cluster health and delivers actionable reports to a centralized "Inbox," allowing platform teams to approve verified fixes instantly.

Security is handled through a secure Platform-Operator-Connection. With mogenius, you don't need to give individual developers direct cluster access; instead, you manage everything via Role-Based Access Control (RBAC) without exposing your clusters.

You can cap AI spend. Platform engineering leads can set daily token limits per cluster within the mogenius dashboard. This prevents unexpected costs and keeps AI-driven troubleshooting within your allocated DevOps budget.

Most generic LLMs only see the error message you copy-paste. The mogenius AI Insights agent runs directly within your cluster. It doesn't just see a "CrashLoopBackOff"; it analyzes your specific YAML manifests, real-time logs, and resource limits to provide a pinpointed root cause analysis rather than generic suggestions.