How and When to Use Terraform with Kubernetes


Terraform is an infrastructure as code tool that replaces the ClickOps method of defining, deploying, and managing infrastructure locally, on-premises, or in the cloud. Its declarative method of defining infrastructure lets you focus on the target state of the infrastructure rather than the steps needed to achieve that state, which makes managing infrastructure easier.

Terraform has its own declarative language, the HashiCorp Configuration Language (HCL, also called the Terraform language), for defining the infrastructure you want, and its command line tool makes it easy to perform operations on your infrastructure.

In this article, you'll learn how and when to use Terraform with Kubernetes, using a local nginx deployment as an example.

When to Use Terraform with Kubernetes

Terraform is a platform-agnostic tool: through its providers, you can use it to manage your entire stack, regardless of the underlying cloud platforms or services. It enables multicloud and hybrid-cloud development by bringing all your infrastructure definitions into one place, which makes managing infrastructure, costs, and security easier.

Combining Terraform with your version control system and CI/CD pipeline also helps you automate infrastructure deployment. If you store your Terraform project in a Git repository and have your pipeline run terraform plan on every pull request (PR), each proposed change is accompanied by the plan output describing what the PR would change in the infrastructure. Once a reviewer approves the changes, the pipeline can run terraform apply -auto-approve to apply them.

Using Terraform with Kubernetes lets you define your Kubernetes resources alongside the rest of your infrastructure, such as networking, storage volumes, databases, security groups, firewalls, and DNS, in a single Terraform project. This is especially useful in multicloud and hybrid-cloud environments, where Terraform can manage the dependencies between Kubernetes objects and cloud resources for you.

Using Terraform with Kubernetes

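Let's now look at how to use Terraform with Kubernetes by walking through an example that sets up two separate nginx clusters, each consisting of an nginx deployment and service in its own namespace on the same local Kubernetes cluster.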

For simplicity, you'll deploy everything on your local machine, which works well for development; in a real-world scenario, the Kubernetes cluster would likely be hosted in the cloud.

Setting Up the Environment

To use Terraform with Kubernetes, you'll need the following tools installed on your system:

* Docker Engine: provides the container runtime that Minikube uses to run your local Kubernetes cluster

* Minikube: acts as your local Kubernetes cluster, which you'll use to run separate nginx deployments and services

* kubectl CLI: lets you access Kubernetes resources – such as pods, deployments, and services – from your command line

* Terraform CLI: lets you work with a Terraform project from your command line (i.e., you can use this tool to plan, apply, and destroy infrastructure)

* Git: the version control system that lets you download and manage the source code for the module you'll be working with in this tutorial

Ensure Docker Engine and Minikube are up and running. Use the following commands to start Minikube:

You'll see an output resembling the following:

Make a note of the URL http://127.0.0.1:57560 as you'll need it later in this tutorial.

Check the status of your Docker machine by running the following command:

Lastly, clone this GitHub repository that contains the Terraform nginx module for this tutorial:

Although you can write all your Terraform code in a single .tf file, doing so is considered bad practice because it makes the code harder to manage. The repository splits the code into the following three files:

* main.tf: contains the definitions of the Kubernetes namespace, deployment, and service

* variables.tf: contains the definitions of the Terraform variables, including the ones you'll set to values from your Minikube configuration

* versions.tf: contains the definition of the Kubernetes provider, along with its source and version
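To make this layout more concrete, here's a minimal sketch of the kind of declarations a variables.tf like this might contain. Only kubernetes_client_certificate, kubernetes_client_key, and kubernetes_cluster_ca_certificate are variable names used in this tutorial; every other name, type, and default below (including kubernetes_host) is a hypothetical assumption, not the repository's actual code.

```hcl
# variables.tf (sketch). Apart from the three certificate-path variables,
# all names and defaults here are hypothetical.

variable "namespace" {
  description = "Namespace to create for the nginx resources"
  type        = string
  default     = "nginx"
}

variable "replicas" {
  description = "Number of nginx pods"
  type        = number
  default     = 1
}

variable "nginx_version" {
  description = "nginx image tag"
  type        = string
  default     = "latest"
}

variable "cpu_limit" {
  description = "CPU limit per nginx container, such as 250m"
  type        = string
  default     = "250m"
}

variable "memory_limit" {
  description = "Memory limit per nginx container, such as 256Mi"
  type        = string
  default     = "256Mi"
}

variable "service_type" {
  description = "Kubernetes service type: ClusterIP, NodePort, or LoadBalancer"
  type        = string
  default     = "ClusterIP"
}

variable "kubernetes_host" {
  description = "Kubernetes API server URL (hypothetical variable)"
  type        = string
}

# These three hold file paths to Minikube's certificate and key files.
variable "kubernetes_client_certificate" {
  type = string
}

variable "kubernetes_client_key" {
  type = string
}

variable "kubernetes_cluster_ca_certificate" {
  type = string
}
```

Variables without a default, like the certificate paths, must be supplied by whoever calls the module; the others can be overridden per call.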

You can find more information on how to structure the files and directories of a Terraform project like this one.

Configuring the Terraform Kubernetes Provider

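This tutorial uses HashiCorp's official Kubernetes provider, which lets Terraform create and manage resources such as namespaces, deployments, and services inside an existing Kubernetes cluster (here, your local Minikube cluster).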

When you run the terraform init command for the first time, Terraform reads the provider requirements in the versions.tf file from the GitHub repository and downloads the Kubernetes provider into your local Terraform project.
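For reference, a versions.tf that pins HashiCorp's Kubernetes provider typically looks something like the sketch below. The version constraint is an assumption rather than the repository's actual value, and the provider block, including the hypothetical kubernetes_host variable, is one plausible way a module could feed the certificate-path variables into the provider; file() reads each PEM file from disk.

```hcl
# versions.tf (sketch): the version constraint below is an assumption.
terraform {
  required_providers {
    kubernetes = {
      source  = "hashicorp/kubernetes"
      version = "~> 2.0"
    }
  }
}

# Provider configuration (sketch). kubernetes_host is a hypothetical variable;
# the three certificate variables hold file paths, so file() loads their contents.
provider "kubernetes" {
  host                   = var.kubernetes_host
  client_certificate     = file(var.kubernetes_client_certificate)
  client_key             = file(var.kubernetes_client_key)
  cluster_ca_certificate = file(var.kubernetes_cluster_ca_certificate)
}
```

With a block like this, terraform init resolves hashicorp/kubernetes from the Terraform Registry, installs a matching 2.x release under the .terraform directory, and records the selected version in .terraform.lock.hcl.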

Creating and Configuring a Terraform nginx Module

To deploy nginx using Kubernetes and Terraform, this tutorial packages the nginx resources as a Terraform module. Terraform lets you create reusable modules when adding new resources to your infrastructure. Although creating modules is easy, HashiCorp advises using them in moderation and avoiding modules that don't add a new abstraction to your architecture, such as thin wrappers around a single resource type.

You could follow Terraform's official tutorial to build your own module, but for this tutorial, you'll use the prebuilt terraform-nginx-k8s module from the GitHub repository. This module is based on the official Kubernetes provider from the Terraform registry.

Before deploying the terraform-nginx-k8s module, let's walk through how to configure its core components and how it defines various Kubernetes resources.

Creating a Kubernetes Namespace for Deploying Resources

Kubernetes namespaces let you isolate groups of resources within a single cluster and manage each group separately. The module creates a new namespace using the kubernetes_namespace resource.

The namespace name defaults to nginx; the default is defined in variables.tf, and you can override it when you call the module. Later in the tutorial, you'll use the module to create two namespaces, nginx-cluster-one-ns and nginx-cluster-two-ns, one for each nginx cluster, and deploy nginx into each of them.
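A minimal sketch of a namespace resource along these lines, assuming the name comes from a module variable called namespace (a hypothetical name) that defaults to nginx:

```hcl
# Sketch: creates one namespace whose name comes from a module variable.
# var.namespace is a hypothetical variable name (default "nginx").
resource "kubernetes_namespace" "nginx" {
  metadata {
    name = var.namespace
  }
}
```

Because each call to the module gets its own copy of this resource, passing a different namespace value to each call is what produces separate namespaces such as nginx-cluster-one-ns and nginx-cluster-two-ns.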

Defining the nginx Deployment

Next, the module defines the nginx deployment using the kubernetes_deployment resource:
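A minimal sketch of what such a deployment might look like, assuming provider 2.x, a namespace resource named kubernetes_namespace.nginx, and hypothetical variables named replicas, nginx_version, cpu_limit, and memory_limit. It is an illustration, not necessarily the module's actual code.

```hcl
# Sketch of an nginx deployment. Variable names and the namespace reference
# are assumptions.
resource "kubernetes_deployment" "nginx" {
  metadata {
    name = "nginx"
    # Referencing the namespace resource makes Terraform create it first.
    namespace = kubernetes_namespace.nginx.metadata[0].name
    labels = {
      app = "nginx"
    }
  }

  spec {
    replicas = var.replicas

    # The selector must match the pod template labels below.
    selector {
      match_labels = {
        app = "nginx"
      }
    }

    template {
      metadata {
        labels = {
          app = "nginx"
        }
      }

      spec {
        container {
          name  = "nginx"
          image = "nginx:${var.nginx_version}"

          port {
            container_port = 80
          }

          # Per-container limits, written as maps in provider 2.x.
          resources {
            limits = {
              cpu    = var.cpu_limit
              memory = var.memory_limit
            }
          }
        }
      }
    }
  }
}
```

The reference to the namespace's metadata is a design choice worth noting: it gives Terraform an implicit dependency, so no explicit depends_on is needed. In the older 1.x provider, limits was a nested block rather than a map.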

This resource takes arguments, such as the namespace, number of replicas, nginx version, CPU limit, and memory limit, from the variables defined in the variables.tf file. You can override these defaults when you call the module; for example, when you import the module later in the tutorial, you'll set the nginx version and the CPU and memory limits for each nginx deployment.

Defining a Kubernetes Service for nginx

Finally, the module defines a Kubernetes service, which exposes the nginx pods behind a single, stable network endpoint.
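A minimal sketch of such a service, assuming it selects pods labeled app = nginx, lives in a namespace resource named kubernetes_namespace.nginx, and takes its type from a hypothetical service_type variable:

```hcl
# Sketch: exposes the nginx pods behind one stable endpoint.
resource "kubernetes_service" "nginx" {
  metadata {
    name      = "nginx"
    namespace = kubernetes_namespace.nginx.metadata[0].name
  }

  spec {
    # Route traffic to every pod carrying this label.
    selector = {
      app = "nginx"
    }

    type = var.service_type

    port {
      port        = 80 # port the service listens on
      target_port = 80 # container port on the nginx pods
    }
  }
}
```

Because the service selects pods by label rather than by name, scaling the deployment up or down changes how many pods sit behind the endpoint without any change to the service itself.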

Deploying the Module and Applying the Configuration

Now that you've run through all the core components of the Kubernetes nginx module, you can deploy it and apply your own configuration.

First, define the two separate nginx clusters by placing the following snippet in a <custom_name>.tf file in a new directory of your choice:

This imports the module for both clusters and passes it cluster-specific values for variables such as the Kubernetes namespace, nginx version, CPU limit, memory limit, and service type. Make sure to set the kubernetes_client_certificate, kubernetes_client_key, and kubernetes_cluster_ca_certificate variables to the correct paths of your Minikube certificate and key files, which are stored in the .minikube folder in your home directory; the kubeconfig file that Minikube writes (~/.kube/config by default) lists their exact locations.
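Putting the pieces together, a <custom_name>.tf for the two nginx clusters might look roughly like the sketch below. The replica counts and 250m CPU limits match the values used in the scaling section; the module source path, nginx version, memory limit, service type, variable names, and the kubernetes_host value are hypothetical placeholders. The certificate paths assume Minikube's default profile, and pathexpand() turns ~ into your home directory.

```hcl
# Sketch: two instances of the same module, one per namespace.
# Source path, nginx version, memory limit, service type, and
# kubernetes_host are hypothetical placeholders.
locals {
  # Minikube's default certificate directory (assumes the "minikube" profile).
  minikube_dir = pathexpand("~/.minikube")
  # Placeholder: use the API server address reported by kubectl cluster-info.
  kubernetes_host = "https://127.0.0.1:8443"
}

module "nginx_cluster_one" {
  source = "./terraform-nginx-k8s"

  namespace     = "nginx-cluster-one-ns"
  replicas      = 2
  nginx_version = "1.25"
  cpu_limit     = "250m"
  memory_limit  = "256Mi"
  service_type  = "NodePort"

  kubernetes_host                   = local.kubernetes_host
  kubernetes_client_certificate     = "${local.minikube_dir}/profiles/minikube/client.crt"
  kubernetes_client_key             = "${local.minikube_dir}/profiles/minikube/client.key"
  kubernetes_cluster_ca_certificate = "${local.minikube_dir}/ca.crt"
}

module "nginx_cluster_two" {
  source = "./terraform-nginx-k8s"

  namespace     = "nginx-cluster-two-ns"
  replicas      = 3
  nginx_version = "1.25"
  cpu_limit     = "250m"
  memory_limit  = "256Mi"
  service_type  = "NodePort"

  kubernetes_host                   = local.kubernetes_host
  kubernetes_client_certificate     = "${local.minikube_dir}/profiles/minikube/client.crt"
  kubernetes_client_key             = "${local.minikube_dir}/profiles/minikube/client.key"
  kubernetes_cluster_ca_certificate = "${local.minikube_dir}/ca.crt"
}
```

Since both blocks call the same module, the two nginx clusters differ only in the values passed in; a third cluster would simply be another module block with its own namespace.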

Once you've updated the variables, run terraform init, terraform plan, and terraform apply one after the other from that directory.

When you run terraform apply, Terraform shows the actions it will take and asks you to confirm them; type yes and press Enter to let it create the resources.

Once the deployment is complete, use the terraform show command to verify that the intended infrastructure was deployed. A sample output of the terraform show command can be found in this GitHub Gist.

Alternatively, use the kubectl CLI to inspect both nginx clusters and the resources deployed in them. To list all the resources deployed in the nginx-cluster-one-ns namespace, run kubectl get all -n nginx-cluster-one-ns.

If the second nginx cluster deployed successfully, running the same command against nginx-cluster-two-ns should list an equivalent set of resources.

This information is also available on the Kubernetes dashboard, as shown in the image below:

Scaling the nginx Deployment

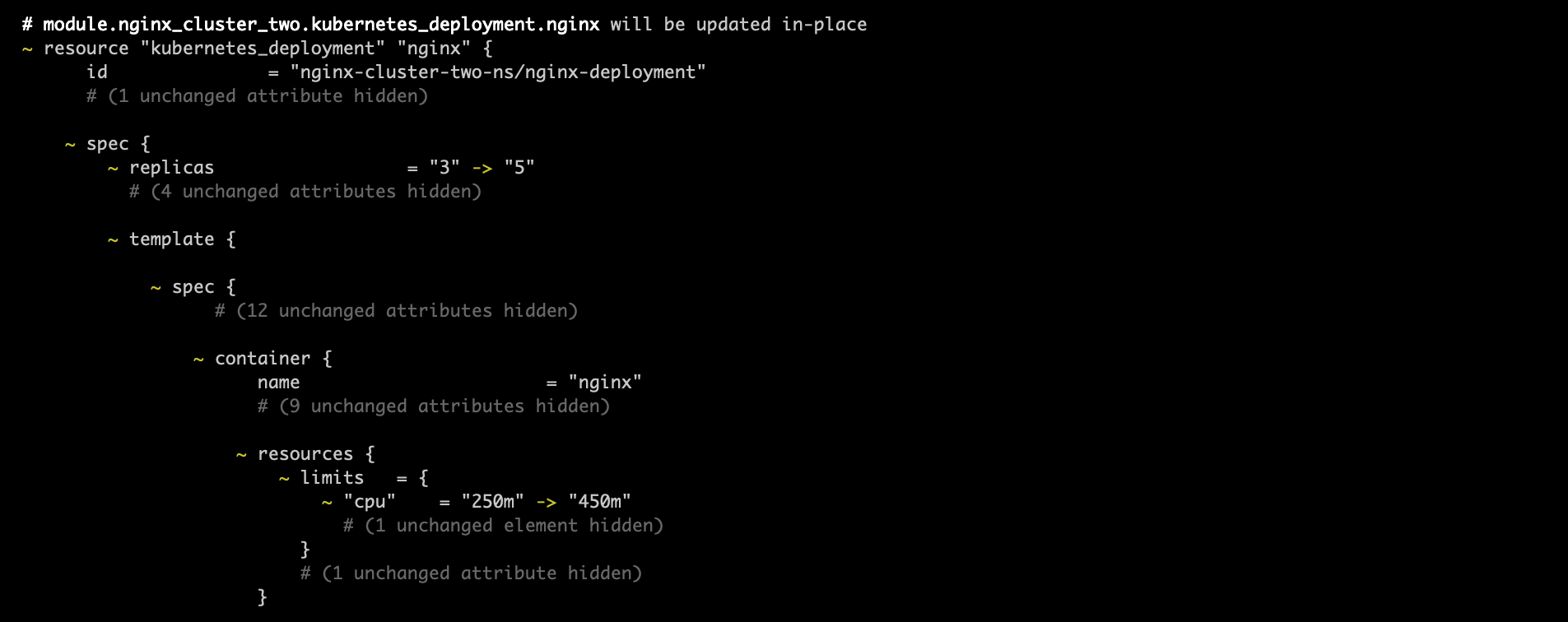

Scaling your nginx deployments with Terraform is straightforward. To scale them up, open the <custom_name>.tf file and make the following changes:

* In nginx_cluster_one, change the number of replicas from 2 to 4 and the CPU limit from 250m to 450m.

* In nginx_cluster_two, change the number of replicas from 3 to 5 and the CPU limit from 250m to 450m.

These changes let your nginx deployments handle more requests: more replicas share the incoming traffic, and each pod can use more CPU. After making these changes, your <custom_name>.tf should look something like the following:
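Since only two arguments change in each module block, a sketch of just those lines looks like this, assuming the module exposes them as replicas and cpu_limit (hypothetical variable names):

```hcl
# Sketch: only the changed arguments are shown. All other arguments
# (source, namespace, certificate paths, and so on) keep their earlier values.
module "nginx_cluster_one" {
  # ...other arguments unchanged...
  replicas  = 4      # was 2
  cpu_limit = "450m" # was "250m"
}

module "nginx_cluster_two" {
  # ...other arguments unchanged...
  replicas  = 5      # was 3
  cpu_limit = "450m" # was "250m"
}
```

Running terraform plan before applying should show both deployments being updated in place (marked with ~) rather than destroyed and recreated; Kubernetes then rolls out pods with the new CPU limit.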

Apply the changes using the terraform apply command.

After applying the infrastructure changes, run the kubectl get all -n nginx-cluster-one-ns command to confirm that four pods, instead of two, are now running in that namespace.

Managing Kubernetes Resources with Terraform

You now know how to deploy nginx into two separate namespaces of a Kubernetes cluster with Terraform, and you can start exploring additional Kubernetes resources that help manage your infrastructure.

To manage the configuration of multiple deployments of the same application, you can use a ConfigMap to inject configuration files and environment variables into your pods, which nginx can then read at startup. For example, a ConfigMap can store the nginx.conf file that nginx loads when it's first deployed.
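A minimal sketch of such a ConfigMap, assuming it goes into the existing nginx-cluster-one-ns namespace; the resource name, ConfigMap name, and the nginx configuration itself are illustrative assumptions.

```hcl
# Sketch: stores an nginx.conf in a ConfigMap. Names and the config
# contents are illustrative assumptions.
resource "kubernetes_config_map" "nginx_conf" {
  metadata {
    name      = "nginx-conf"
    namespace = "nginx-cluster-one-ns"
  }

  data = {
    # Each key becomes a file name when the ConfigMap is mounted as a volume.
    "nginx.conf" = <<-EOT
      events {}

      http {
        server {
          listen 80;

          location / {
            root /usr/share/nginx/html;
          }
        }
      }
    EOT
  }
}
```

For nginx to actually read this file, the deployment's pod spec also needs a volume block that references the ConfigMap (through a nested config_map block) and a volume_mount on the container, for example at /etc/nginx/nginx.conf with sub_path set to "nginx.conf".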

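Another important Kubernetes resource is the Ingress, which lets you define rules that route inbound connections to backend services. In Terraform, you describe it with the kubernetes_ingress resource, or with kubernetes_ingress_v1 on clusters running Kubernetes 1.22 or later, which no longer serve the older Ingress API versions. Terraform's official documentation includes an example of how to describe this resource in your Terraform project.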
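As an independent minimal sketch (not the documentation's example), the following kubernetes_ingress_v1 resource routes HTTP requests for a hypothetical host name to a service assumed to be named nginx in the nginx-cluster-one-ns namespace:

```hcl
# Sketch: routes all paths on one host to the nginx service.
# Host, resource names, and the service name are hypothetical.
resource "kubernetes_ingress_v1" "nginx" {
  metadata {
    name      = "nginx-ingress"
    namespace = "nginx-cluster-one-ns"
  }

  spec {
    rule {
      host = "nginx.example.local"

      http {
        path {
          path      = "/"
          path_type = "Prefix"

          # Backend: the service and port that receive matching requests.
          backend {
            service {
              name = "nginx"
              port {
                number = 80
              }
            }
          }
        }
      }
    }
  }
}
```

An Ingress only takes effect when an ingress controller is running in the cluster; on Minikube, you can enable one with minikube addons enable ingress.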

These resources aren't part of the GitHub repository you cloned at the beginning of the tutorial, but you can add them to your module as your requirements dictate.

Conclusion

This article demonstrated some of the useful features of Terraform and how to use it to deploy nginx on Kubernetes using a module built with the official Kubernetes provider from the Terraform registry.

Kubernetes can be difficult to manage, especially when you're bringing changes made in local environments to production. Because it's difficult to mirror actual production setups, teams often face the infamous "works on my machine" dilemma.

mogenius helps tackle this challenge by enabling you to create self-service Kubernetes environments with enhanced visibility. With a unified view of both application and infrastructure components, simplified Kubernetes interaction, and guided troubleshooting, mogenius supports better local testing for developers. It also allows you to integrate seamlessly with CI/CD pipelines, configure external domains, manage SSL, set up load balancing, and handle certificate management. This approach enhances the developer experience, particularly in cloud-agnostic or multicloud environments.
